There is no doubt that AI is disrupting the way we create and trust digital content.

One area of the event industry where I am seeing increased use of AI is content submission by prospective speakers.

At Commsverse 2024 we received over 300 session submissions, and with just 60 content slots available, competition for those places was intense.

This made our session evaluation even more important to get right.

But unlike previous years, when session abstracts varied in the way they were written, this year we received 57 submissions that had been created using AI without any editing by the submitter.

This made our evaluation task harder this year than it has ever been.

As an event organizer, I have a responsibility to ensure that the content put on offer at my event is:

There is nothing worse for an attendee than being pitched an amazing session that promises great learning potential, only to find out the speaker cannot actually deliver on the promises their session description made.

The attendee feels let down by the speaker, but also by the event organizers for selecting the content in the first place.

This leads to negative feedback and in the worst possible case, impacts their decision to return to your event in the future.

It’s ultimately not great for the speaker either. They might think that AI helped them get a session selected at an event, but unless they are confident they can deliver on the promises, this leaves them vulnerable at delivery time.

For a speaker, there is nothing worse than struggling through a session and watching their audience disengage, lose interest, and walk out.

Fundamentally, the speaker must shoulder most of the blame for these types of session outcomes. They chose to put their faith in AI to generate a session abstract that sounded great, but failed to check whether they could deliver on the output AI produced, or indeed whether it was an accurate description of their session topic and outcome.

Some of the blame must sit with the organizer, or the people who are evaluating sessions.

They may not be subject matter experts on the topic, so they can fail to spot inconsistencies, but they can sense-check submissions to determine whether what is being proposed can actually be delivered by the speaker in the time permitted.

The first clue we had that submissions were generated by AI was the way they were written. The grammar used and the formatting and construction of the submission are key giveaways.

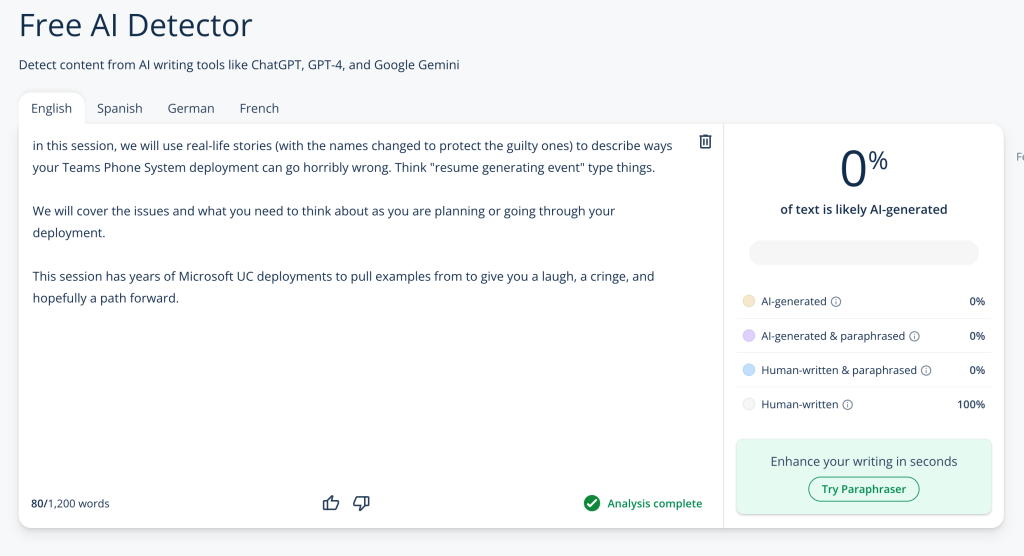

A typical content submission written by a human looks something like this:

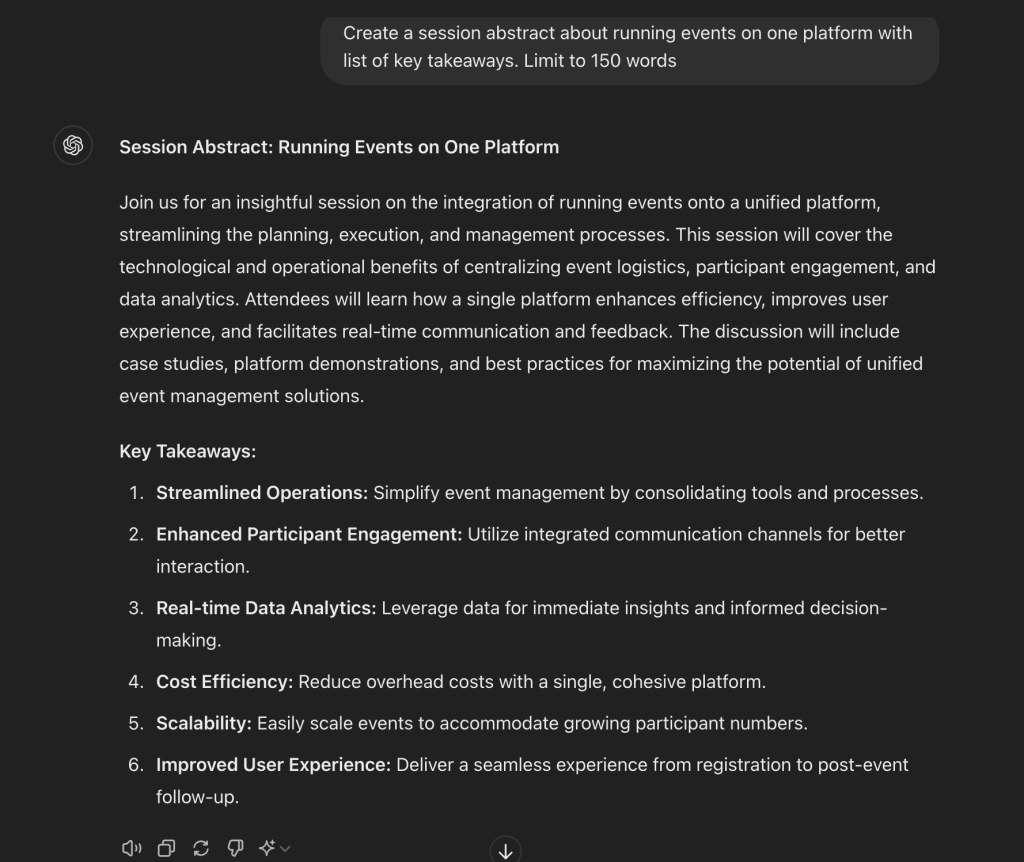

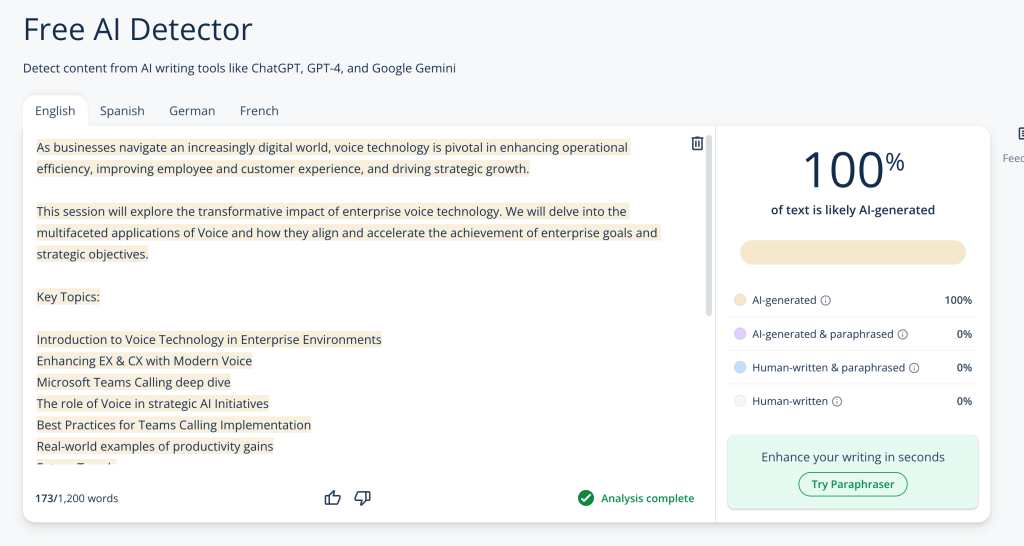

But AI-generated submissions are suspiciously polished, like this:

There were a few alarm bells with this submission that raised our concern about its validity as a genuine session:

The main objection was that, with only 25 minutes available for this session, there is no way even the world’s best speakers could deliver even 10% of what the description promised in that timeframe.

Even in 45 minutes, this type of session would be hard to execute.

You might say, well, this is your bias and interpretation; maybe the speaker genuinely wrote this themselves?

I put my theory to the test by asking the one thing that would be able to tell me if it was generated by AI, and that was AI itself, aka ChatGPT.

I asked ChatGPT “what the probability was that the following text is generated by AI as a percentage”.

Let’s have a look at the genuine session submission first.

As we can see, we’re 100% confident that this content was created by a human.

Now for the content we suspected was generated by AI.

Conclusive evidence that AI thinks that this content was created by AI.

But wait, if we can’t trust AI then how can we trust the result? Well, after our call for content was completed and our sessions chosen, I was contacted by the submitter of this session to ask why it hadn’t been chosen.

I responded with my reasoning that the session description appeared to be created by AI without any sanity checking on whether they could deliver on the session promises in the time given. Therefore, their session was rejected.

The speaker candidly admitted that they did use AI to create the session submission and on reflection they agreed that it seemed overzealous.

In a word, no.

AI is a great tool for ideation and giving you a starting point to work from. The hardest part of any story is the idea and the first sentence.

AI gives you that assistance in kick-starting your ideation process and setting you off on the right track. Using it as a framework for your content submission gives you a better chance of standing out in a crowded submission pool.

But using it wholly and on its own, taking its output and pasting it directly into your submission and hoping to be selected is going to let you, your audience, and the event as a whole down if it is inaccurate or undeliverable.

You should take the content as a baseline to set your session objective from, then check that it is factually correct, that you can deliver on the promises effectively within the timeframe, and that it appears genuine to your target audience.

You should edit it, rewording or rewriting sections to improve its quality, so that it hits everything content reviewers are looking for and also resonates with your target audience when they read it.

Circling back to the AI example in this post: had the submitter spent 5 minutes reading the AI content and refining it into a description that made sense and felt deliverable, they could’ve been selected.

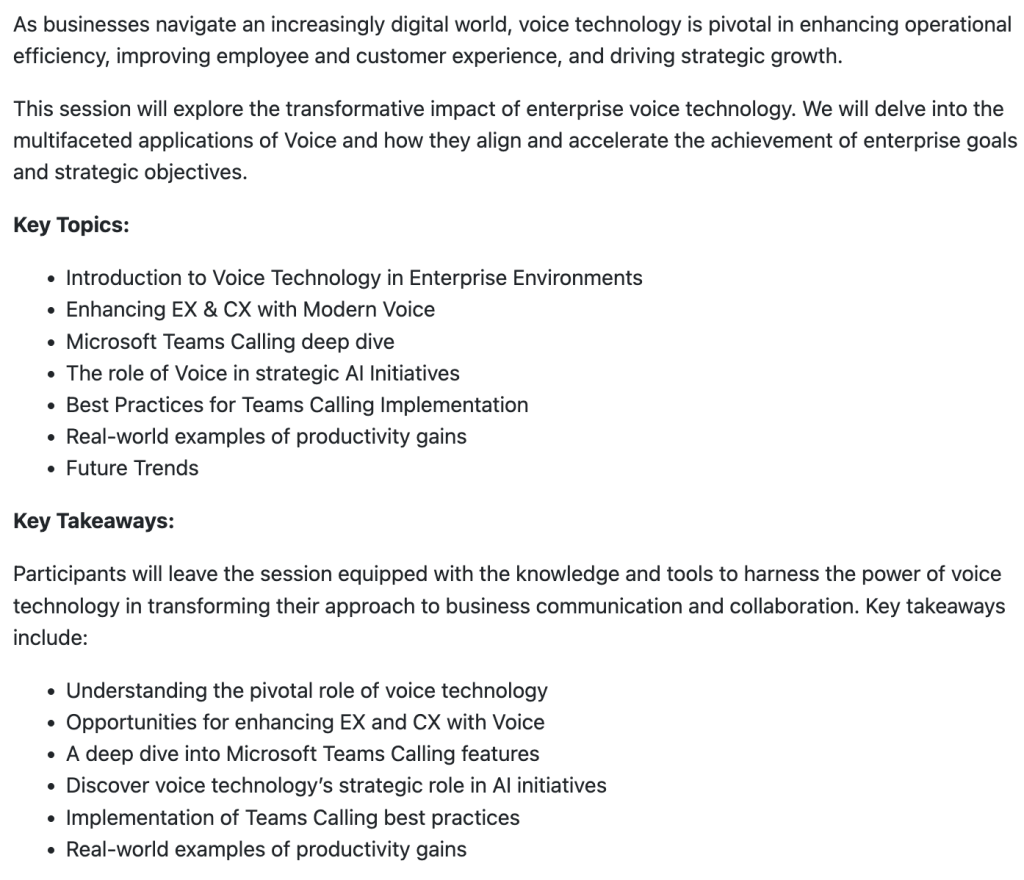

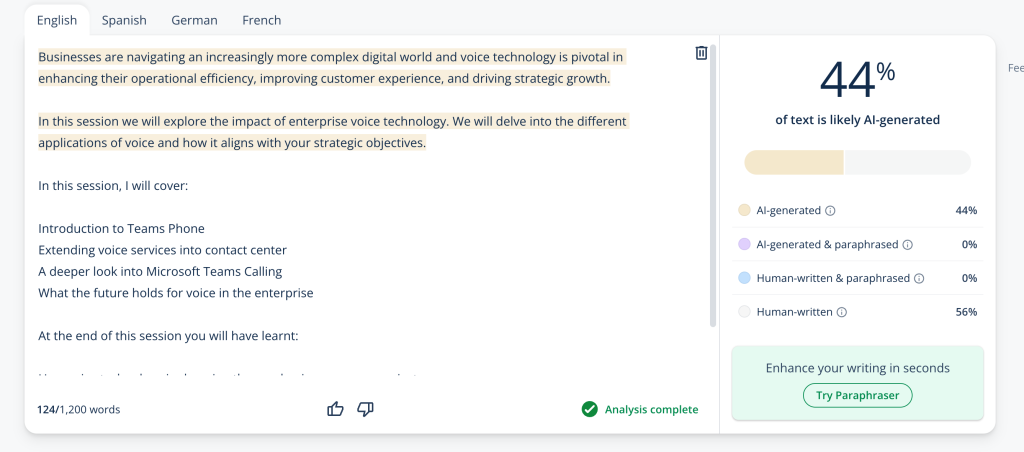

Here I have taken their submission, changed a few words and phrases to focus on the session topic of Microsoft Teams, and simplified the key points and takeaways.

The outcome is that both human and AI have contributed to this session submission.

As an event organizer evaluating this submission, I would have no hesitation in approving it because:

You could copy and paste submissions into AI content detectors, as I have done in this article. However, that is going to be laborious and not scalable.

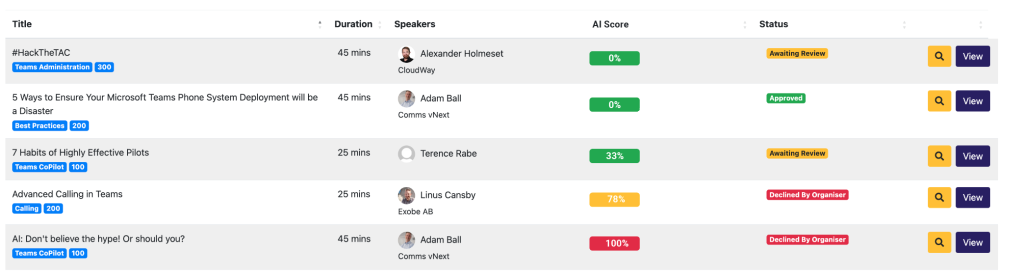

Or you could use Just Attend for your event management and use our AI content detector in the call for speakers module.

The AI detector evaluates each submission to determine whether AI has contributed to the creation of the content, and how much.

You can then use this as a guide when evaluating sessions.

What’s a good balance?

This question is quite hard to answer, as it depends on the topic and the content depth. That said, in my opinion you should consider any session with an AI score of less than 60%.

If at least 40% of the submission is human input, then the submitter has at least sanity-checked the content to make sure they’re comfortable with the submission.
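As a rough illustration of that rule of thumb, the filter could be sketched as below. This is a minimal sketch under stated assumptions, not how any particular detector or the Just Attend module works: the `Submission` class, the `ai_score` field (assumed to be a 0 to 100 percentage), the function names, and the sample titles and scores are all hypothetical.

```python
from dataclasses import dataclass

# Assumed cut-off taken from the rule of thumb above: consider any
# session whose AI score is below 60%.
AI_SCORE_THRESHOLD = 60.0


@dataclass
class Submission:
    title: str        # hypothetical session title
    ai_score: float   # hypothetical detector output, 0-100 (% attributed to AI)


def human_share(submission: Submission) -> float:
    """Percentage of the submission treated as human input."""
    return 100.0 - submission.ai_score


def shortlist(submissions, threshold=AI_SCORE_THRESHOLD):
    """Keep submissions whose AI score is strictly below the threshold."""
    return [s for s in submissions if s.ai_score < threshold]


# Made-up sample data, for illustration only.
sample = [
    Submission("Session A", ai_score=10.0),
    Submission("Session B", ai_score=59.0),
    Submission("Session C", ai_score=60.0),
    Submission("Session D", ai_score=95.0),
]

for s in shortlist(sample):
    print(f"{s.title}: {human_share(s):.0f}% human input")
```

The score is only a guide for the reviewer. A session that passes the filter still needs the usual sense check on whether it can be delivered in the time available.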

Do 100% human submissions carry more weight? That is up to you, but I don’t think that is necessarily a fair judgment.

At the end of the day, you as an event organizer want the best content your community has to offer. Some speakers find it hard to write good session abstracts but deliver content excellently; others can write confidently but speak poorly.

Using AI, along with better intelligence on where it has been used and in what quantity, offers you as an organizer a better chance of choosing the right sessions.

If you’d like to find out more about our AI content detector for call for content, book your demo below.
